OpenAI has introduced GPT-4, the latest in its line of artificial intelligence language models. The company describes it as safer, more creative and more useful. MC.today looked into what GPT-4 is, why this AI model is called multimodal, how it differs from GPT-3.5, what limitations it has, and how to get access to it today.

What is GPT-4

GPT-4 is the latest AI (artificial intelligence) model from OpenAI. It is the fourth in the line of GPT language models and the first to be multimodal. This means it can process not only text but also other types of information, such as images.

"Here is GPT-4, our most capable and aligned model yet," OpenAI CEO Sam Altman wrote on Twitter on March 14. "It is available today in our API (with a waitlist) and in ChatGPT Plus."

But, as it turned out, some users had access to the new technology much earlier. Microsoft confirmed that its new Bing search engine had been quietly running on GPT-4 even before the model's official launch.

When Microsoft launched the new Bing, there were already rumors that it used GPT-4, so the news was hardly a sensation. What is more interesting is that the corporation was willing to put its reputation on the line, which suggests it was fully confident in the capabilities and prospects of OpenAI's new creation.

GPT-4's technical report does not explicitly state the model's specifications. Some media outlets rushed to repeat rumors that the number of parameters had grown from 175 billion to 100 trillion, but OpenAI CEO Sam Altman himself called such rumors "complete nonsense."

"In a casual conversation, the distinction between GPT-3.5 and GPT-4 can be subtle," OpenAI notes in its GPT-4 announcement. "The difference comes out when the complexity of the task reaches a sufficient threshold. GPT-4 is more reliable, creative, and able to handle much more nuanced instructions than GPT-3.5."

One of GPT-4's most interesting features is its ability to understand images. For example, if you show it a photo and ask what is happening in it, it might answer: "a man is ironing clothes on an ironing board attached to the roof of a car."

How GPT-4 came about

The GPT-4 model did not appear out of nowhere: it is the latest generation of the GPT family. The abbreviation stands for Generative Pre-trained Transformer, that is, a Transformer that has been pre-trained to generate text.

The Transformer is not a sci-fi robot but a neural network architecture developed by Google researchers in 2017. It was the invention of the Transformer that pulled AI development out of stagnation.

The main strengths of this architecture are its flexibility, its scalability and its ability to process data in parallel. OpenAI engineers were the first to show that the Transformer could be used for text generation.
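Much of that parallelism comes from the Transformer's core operation, scaled dot-product attention (described in Google's 2017 paper "Attention Is All You Need"). Every token position compares itself with every other position through dot products rather than stepping through the sequence one word at a time. Below is a minimal pure-Python sketch of that single operation, not of GPT-4 itself. The function names are illustrative, and real implementations run batched matrix operations on GPUs.

```python
import math


def softmax(xs):
    # Subtract the maximum before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V.

    Q, K and V are lists of row vectors, one row per token position.
    Each output row depends only on its own query row, so all rows
    could be computed in parallel.
    """
    d = len(K[0])
    out = []
    for q in Q:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in K]
        weights = softmax(scores)
        # The output is the attention-weighted sum of the value vectors.
        out.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return out


# Two identical keys get equal weight, so the output is the average of the values.
print(attention([[1.0, 1.0]], [[1.0, 0.0], [1.0, 0.0]], [[1.0, 2.0], [3.0, 4.0]]))
```

Because no output row waits on another, the loop over `Q` is exactly the part that hardware can spread across many cores at once.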

Their language model GPT-1 easily surpassed all its predecessors in its ability to work with large amounts of information. In 2019 it was succeeded by GPT-2, with a 40 GB training dataset and 1.5 billion parameters. A year later came GPT-3, with 175 billion parameters and the training data increased to 420 GB.

After additional training of GPT-3 with feedback from testers, the model was designated GPT-3.5. Paired with a convenient interface, it became the very ChatGPT that Bill Gates called the most important innovation of the 21st century.

To train GPT-4, OpenAI engineers and the Microsoft Azure cloud platform built a dedicated supercomputer from scratch. OpenAI then spent six months using it to train GPT-4 on an even larger dataset and to refine the model based on real-world experience with ChatGPT.

It should be noted that ChatGPT itself is not a version of the language model. Although it is often equated with GPT-3.5, it is only a way to interact with that model, and with the Plus subscription it is now also a way to interact with GPT-4.

What makes GPT-4 different from its predecessors

To better understand how the familiar chatbot differs from its upgraded version, here are five key differences.

GPT-4 can understand images

Multimodality allows GPT-4 to understand more than one "mode," or type, of information. All previous GPT models could handle only text; GPT-4, by contrast, can analyze images and understand what they show.

Combined with stronger reasoning abilities, this produces striking results. For example, GPT-4 not only recognizes that an image shows an oversized connector plugged into an iPhone, but can also explain why it is funny. After all, humor was long considered out of reach for AI.

OpenAI's collaboration with Be My Eyes, a platform for blind people, also looks promising. The GPT-4-based app will let blind and partially sighted people get an audio description of everything their phone's camera sees.

For example, if a user sends a photo of the inside of their refrigerator, the virtual assistant will be able to tell what is in it. It can also describe the pattern on a dress, translate a label, read a map and explain how to get to the right department in a store.

GPT-4 is more difficult to confuse

OpenAI has done a lot of work to make GPT-4 safer. As a result, the model is 82% less likely than its predecessor to respond to requests for prohibited content. Its answers to sensitive and ambiguous requests have also improved significantly.

However, GPT-4 can still generate harmful content. Broadly, these risks fall into five groups:

- Advice or encouragement that can lead to self-harm.

- Harassment, demeaning and hateful content.

- Materials of an erotic nature.

- Information that may be useful in planning attacks or violence.

- Instructions for finding illegal content.

GPT-4 can remember more text during a conversation

Large language models have been trained on millions of web pages. But if you try to paste even one page of text into ChatGPT, it may turn out to be too much. Likewise, the chatbot's responses are often cut off after four or five paragraphs.

The reason is that there is a limit to how much information a language model can "keep in mind." We wrote more about this in our article on integrating a GPT assistant into Google Docs.

For the earlier version of ChatGPT, this limit was 4,096 tokens. That corresponds to roughly 3,000 English words, but far fewer words in other languages. For example, the English phrase "What is your favorite animal" is split into 5 tokens, while the same phrase in Ukrainian takes 31 tokens.

GPT-4 goes a long way toward solving this problem. The standard version accepts 8,192 tokens, and in the extended version the maximum number of tokens per request rises to 32,768. That is about 50 pages of text, which is quite enough for a meaningful conversation with AI on almost any topic.
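To make these limits concrete, here is a minimal sketch that checks whether an English prompt is likely to fit a model's context window. It assumes the common rule of thumb of about 0.75 English words per token; exact counts require the model's actual tokenizer (OpenAI publishes one as the `tiktoken` library), and non-English text usually needs many more tokens. The model names are the API names used at GPT-4's launch. The helper functions are hypothetical, and the 500-token reply budget is an arbitrary choice: the prompt and the model's answer share the same window, so some room has to be left for the reply.

```python
import math

# Context-window sizes, in tokens, of the API models discussed above.
CONTEXT_LIMITS = {
    "gpt-3.5-turbo": 4096,
    "gpt-4": 8192,
    "gpt-4-32k": 32768,
}

# Rule of thumb for English only (assumption): about 0.75 words per token.
# Languages such as Ukrainian usually need several times more tokens per word.
WORDS_PER_TOKEN = 0.75


def estimate_tokens(text: str) -> int:
    """Roughly estimate the token count of English text from its word count."""
    return math.ceil(len(text.split()) / WORDS_PER_TOKEN)


def fits_in_context(text: str, model: str, reply_budget: int = 500) -> bool:
    """Check whether the prompt plus tokens reserved for the reply fit the window."""
    return estimate_tokens(text) + reply_budget <= CONTEXT_LIMITS[model]


prompt = "word " * 3000  # a hypothetical 3,000-word English prompt
print(estimate_tokens(prompt))                   # 4000
print(fits_in_context(prompt, "gpt-3.5-turbo"))  # False: too long for 4,096 tokens
print(fits_in_context(prompt, "gpt-4"))          # True: fits in 8,192 tokens
```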

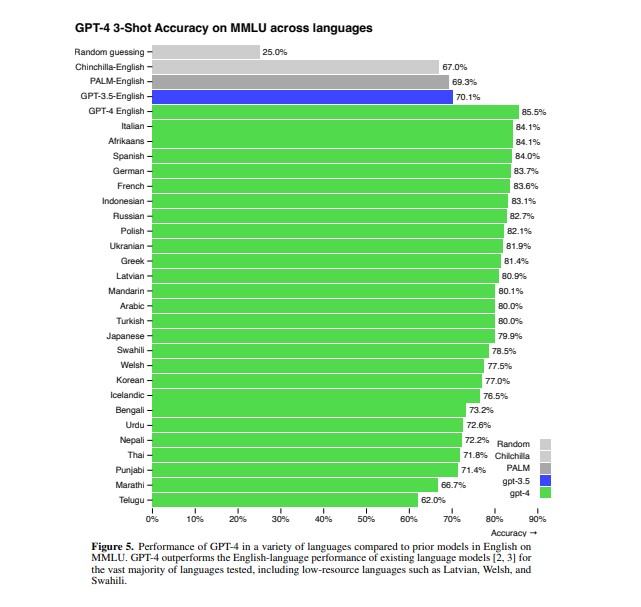

GPT-4 has improved multilingualism

Initially, ChatGPT's responses in English were significantly better than its responses in other languages. As a result, many users had to do double work: first translate the request into English, then translate the response back.

GPT-4 has taken a step forward here. In OpenAI's tests across 26 languages, including Italian, Ukrainian and Korean, its answers were almost as accurate as in English.

GPT-4 can change its behavior on demand

The developers have made GPT-4 considerably more steerable. Through the API, you can send system messages that change the AI's style, set the tone of its responses and define specific scenarios for interacting with people.

For example, a system message might read: "You are a tutor who always responds in the Socratic style. You never give the student the answer, but always try to ask just the right question to help them learn to think for themselves."
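As a rough illustration, the sketch below builds a request body for OpenAI's Chat Completions API, where the system message goes first in the `messages` list with the role `"system"`, followed by the user's message. It only constructs the JSON; sending it would mean an HTTPS POST to `https://api.openai.com/v1/chat/completions` with an API key in the `Authorization` header. The helper name and the sample question are hypothetical, and the request format reflects the API around GPT-4's launch, so check OpenAI's current documentation before relying on it.

```python
import json

SOCRATIC_TUTOR = (
    "You are a tutor who always responds in the Socratic style. "
    "You never give the student the answer, but always try to ask just the "
    "right question to help them learn to think for themselves."
)


def build_chat_request(system_prompt: str, user_message: str, model: str = "gpt-4") -> dict:
    """Build a Chat Completions request body (hypothetical helper).

    The system message sets the assistant's behavior for the whole
    conversation; the user message is the actual question.
    """
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_message},
        ],
    }


body = build_chat_request(SOCRATIC_TUTOR, "How do I solve 3x + 2 = 11?")
print(json.dumps(body, indent=2))
```

Swapping in a different system message changes the assistant's tone and role without touching the user's question.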

Limitations and disadvantages of GPT-4

"GPT-4 generally lacks knowledge of events that occurred after September 2021 and does not learn from its experience," OpenAI writes. "It can sometimes make simple reasoning errors or be overly gullible in accepting obviously false statements from a user."

GPT-4 also still exhibits social biases, is prone to hallucinations and can be vulnerable to adversarial prompts. However, the developers aim to give people more ways to help shape the model and encourage users to rate good and bad AI responses in the chat window more actively.

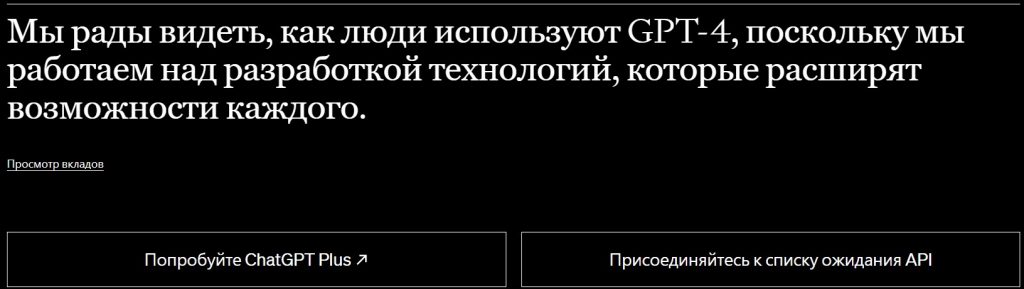

How to access GPT-4

Today, there are two ways to access GPT-4. First, it is available to paying OpenAI users through a ChatGPT Plus subscription (with a usage cap). The subscription costs $20 per month.

Second, developers can join a waiting list for access to the new model's API. The price is $0.03 per 1,000 prompt tokens (about 750 English words) and $0.06 per 1,000 completion tokens.

Prompt tokens are the pieces of words you send to GPT-4, and completion tokens are the pieces of GPT-4's response.
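The billing arithmetic is simple enough to sketch. The snippet assumes the launch prices quoted above for the standard (8K-context) GPT-4 API model; the 32K-context version was priced at twice these rates, and prices may have changed since. The token counts in the example are hypothetical; in practice, the API reports the actual prompt and completion token counts in the `usage` field of each response. `Decimal` is used so the currency amounts are not distorted by floating-point rounding.

```python
from decimal import Decimal

# Launch prices for the standard GPT-4 API model, in USD per 1,000 tokens.
PROMPT_PRICE_PER_1K = Decimal("0.03")
COMPLETION_PRICE_PER_1K = Decimal("0.06")


def request_cost(prompt_tokens: int, completion_tokens: int) -> Decimal:
    """Return the cost in USD of one GPT-4 API call at the prices above."""
    prompt_part = PROMPT_PRICE_PER_1K * prompt_tokens
    completion_part = COMPLETION_PRICE_PER_1K * completion_tokens
    return (prompt_part + completion_part) / 1000


# A hypothetical call: a 1,500-token prompt and a 500-token answer.
print(request_cost(1500, 500))  # 0.075
```

Because completion tokens cost twice as much as prompt tokens, long answers raise the bill faster than long questions.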

Opportunities and prospects

OpenAI is already working with a number of companies that have integrated GPT-4 into their products. For example, Stripe uses GPT-4 to scan businesses' websites.

Duolingo has built the latest AI model into its new language learning subscription tier. Morgan Stanley is building a system based on GPT-4 that will extract information from company documents and provide it to financial analysts.

There will be more such applications in the future. And ordinary users will get a more powerful and safer GPT assistant that understands jokes, remembers long conversations, can stand in for a tutor in any school subject and can serve as a second pair of eyes for people with visual impairments.

"We hope that GPT-4 will become a valuable tool for improving people's lives by powering many applications," OpenAI writes. "There is still a lot of work to do, and we look forward to improving this model through the collective efforts of the community."