Over the past six years, artificial intelligence technology has gained enormous popularity. Developments in this field could transform our daily lives. Amid the hype surrounding AI, there are also concerns about the ethical implications of its development.

According to some experts, the risks are not limited to potential disinformation attacks and go beyond generative services, spreading to almost all spheres of human activity.

ForkLog has examined the main concepts of ethical AI and its safety.

- The debate about the ethics of artificial intelligence has continued since the technology's inception.

- Algorithms that interact with humans must be checked for risks in accordance with generally accepted standards of morality.

- Artificial intelligence does not need to be made to resemble human intelligence.

- Scientists, tech giants and governments agree on the basic principles of ethical AI.

Definition of AI ethics and history of the term

Ethics itself is a philosophical discipline that studies morality. In other words, it explains what is good and what is bad.

The ethics of artificial intelligence, in turn, is a set of principles of responsible AI — what it should do and what it should not do. These principles often involve safety, fairness and human-centeredness.

Writers working in the genre of science fiction were among the first to raise the topic of "machine morality". In their works, they reasoned about the behavior of robots, their mind, feelings and interaction with humans. This trend was called "robotics".

The idea was popularized by Isaac Asimov, author of the short story "Runaround", in which he first formulated the "Three Laws of Robotics". Later, in the novel Robots and Empire, Asimov added a Zeroth Law to the list. They read roughly as follows:

- Zeroth Law: a robot may not harm humanity or, through inaction, allow humanity to come to harm.

- First Law: a robot may not harm a human being or, through inaction, allow a human being to come to harm.

- Second Law: a robot must obey human orders, except when those orders conflict with the First Law.

- Third Law: a robot must protect its own existence as long as this does not conflict with the First or Second Law.

Asimov's laws gained popularity and went far beyond his literary works. They are still discussed by writers, philosophers, engineers and politicians.

According to a number of critics, such norms are difficult to implement in practice. Science fiction writer Robert Sawyer has said that businesses are not interested in developing built-in safety measures.

However, the transhumanist Hans Moravec has the opposite opinion. According to him, corporations that use robots in production can use a "sophisticated analogue" of these laws to manage the technical process.

Governments have also drawn on the rules formulated by Asimov. In 2007, the South Korean government developed a Robot Ethics Charter. The main provisions of the document resemble the postulates set forth by the writer.

The use of robots in the military has made the problem more urgent. In 2021, a UN report recorded what was reportedly the first killing of a human by an AI-powered drone that independently identified the target, tracked it and decided to eliminate it.

Since generative algorithms such as GPT and DALL-E became popular, discussions about the ethics of artificial intelligence have widened. Governments are debating the potential risks and drawing up plans to regulate AI technology, while tech giants are building internal teams to create safe AI.

"The trolley problem"

The development of technologies that directly affect people's lives gives rise to a number of ethical and moral dilemmas. They are illustrated most vividly by self-driving cars: how should a robocar act in an emergency? Should it endanger the life of the passenger or of a pedestrian?

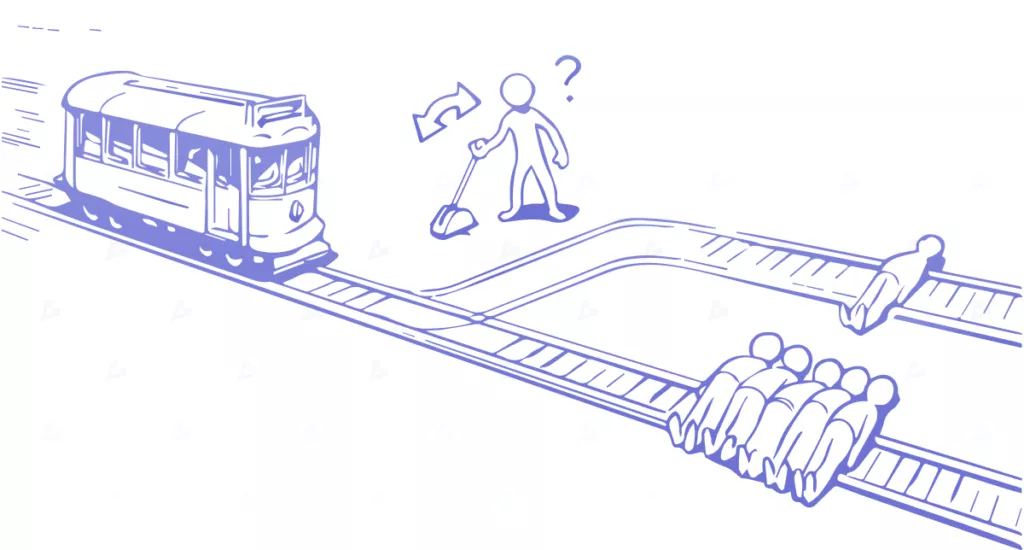

This dilemma is well illustrated by the "trolley problem", which was formulated in 1967 by the English philosopher Philippa Foot. The problem runs roughly as follows:

"A trolley has gone out of control and is speeding along a railway track. Ahead, five people are tied to the tracks and cannot move. You are standing some distance away, next to a switch. If you throw the switch, the trolley will be diverted onto a side track, but one person is tied there.

You have no other way to influence the situation. There are only two options: do nothing, and the trolley will kill five people, or throw the switch and sacrifice one life. Which option is correct?"

Most moral principles can be recast as similar problems and tested in a series of experiments. In real life, there may be many more options, and the consequences may be less tragic. However, the choice made by AI will affect people's lives in one way or another, and not all risks can be excluded.

AI should not learn from humans

Although moral and ethical principles are shaped by people, AI should not imitate people, because the biases inherent in society can find their way into the algorithm.

This is well illustrated by face recognition technology. To build such systems, a large dataset of images of people is collected. The process is often driven by automated bots that do not take sample diversity and context into account. As a result, the dataset may be biased from the outset.

The next stage of development is model building and training. Biases and distortions can also creep in at this stage, including through the "black box" problem. Engineers simply don't know why the algorithm makes this or that decision.

Risks of bias also exist at the level of interpretation, and at this stage a person plays a greater role. For example, an algorithm is trained on a certain sample of people and produces specific results. When the system is then applied to another group, the results change dramatically.

In 2021, Dutch activists demonstrated bias in a system used to allocate social assistance. According to them, its use violated human rights. As a result, the municipal authorities stopped using the software.

Developers also report that models do not transfer well from one dataset to another. In March 2023, a group of researchers developed an algorithm that predicts the risk of pancreatic cancer. To train the system, the researchers mainly used medical records of patients in Danish clinics.

The scientists warned that the algorithm's accuracy drops significantly when it is applied to patients from the US. Because of this, the algorithm should be retrained on data from American patients.
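The effect the researchers describe can be shown with a toy model. The sketch below is not their method: it assumes a single hypothetical numeric marker and two made-up cohorts, `cohort_a` and `cohort_b`, and fits a simple cut-off on the first cohort before applying it to the second.

```python
def accuracy(samples, threshold):
    """Share of samples where (value >= threshold) matches the label."""
    correct = sum((value >= threshold) == label for value, label in samples)
    return correct / len(samples)


def fit_threshold(samples):
    """Pick the cut-off on one numeric feature that best separates the labels."""
    candidates = sorted({value for value, _ in samples})
    return max(candidates, key=lambda t: accuracy(samples, t))


# Hypothetical cohorts: (marker value, has_condition). Invented for illustration.
cohort_a = [(1, False), (2, False), (3, False), (4, False),
            (5, True), (6, True), (7, True), (8, True)]
# In the second cohort the same marker runs higher for healthy people.
cohort_b = [(3, False), (4, False), (5, False), (6, False),
            (7, True), (8, True), (9, True), (10, True)]

threshold = fit_threshold(cohort_a)      # learned only from cohort A
acc_a = accuracy(cohort_a, threshold)    # accuracy on the training cohort
acc_b = accuracy(cohort_b, threshold)    # accuracy after moving to cohort B

retrained = fit_threshold(cohort_b)      # cut-off refitted on cohort B
acc_b_retrained = accuracy(cohort_b, retrained)

print(f"cut-off from A: {threshold}, accuracy on A: {acc_a}, on B: {acc_b}")
print(f"cut-off refitted on B: {retrained}, accuracy on B: {acc_b_retrained}")
```

A cut-off that separates the first cohort perfectly misclassifies part of the second, and refitting on the second cohort restores the accuracy. Real clinical models are far more complex, but the reason for retraining on local data is the same.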

Popular principles of ethical AI

Research groups, human rights advocates, tech giants and governments offer different approaches, but they all share a number of similarities. Let's consider the most popular opinions.

Fairness and non-discrimination

AI systems must be designed to minimize discrimination based on race, sex, religion or other protected characteristics. Biases in algorithms can reinforce existing inequalities and harm certain groups.

For example, facial recognition systems have shown uneven accuracy, with higher error rates for certain racial and gender groups. In 2019, a Black resident of New Jersey (USA) spent 10 days in jail because of an algorithm error. Similar cases occurred in other cities, and the police faced a wave of criticism for using biased technologies.

Ethical AI techniques can help identify and mitigate such biases, promoting fairness in decision-making.
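One basic way to identify such biases is to compare error rates across groups. The following is a minimal sketch, assuming a hypothetical audit sample in which each record holds a group label, the system's prediction and the true answer. The function names and the data are illustrative and do not come from any real system.

```python
from collections import defaultdict


def error_rate_by_group(records):
    """Return {group: share of wrong predictions} for labelled records.

    Each record is a (group, predicted, actual) tuple.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        if predicted != actual:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


def max_disparity(rates):
    """Gap between the highest and lowest per-group error rates."""
    return max(rates.values()) - min(rates.values())


# Hypothetical audit sample: (group, predicted match, true match)
sample = [
    ("group_a", True, True), ("group_a", False, False),
    ("group_a", True, True), ("group_a", False, False),
    ("group_b", True, False), ("group_b", False, False),
    ("group_b", True, True), ("group_b", True, False),
]

rates = error_rate_by_group(sample)
gap = max_disparity(rates)
print(rates, gap)
```

A large gap between groups does not explain where the bias comes from, but it signals that the system should be examined before it is used to make decisions about people.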

Transparency and explainability

Transparency and explainability are critical to building trust in AI. Systems must be designed in such a way that their decision-making processes and outcomes are understandable to all interested parties.

Developers of generative systems are considering the possibility of using watermarks to mark content created by algorithms. They believe this will increase user awareness and prevent the spread of misinformation.

Another example of transparent use of AI is the Biometric Information Privacy Act of the state of Illinois (USA). The law prohibits businesses from using technologies such as facial recognition without explicitly notifying users.

Companies such as Google and Clearview AI have already faced multimillion-dollar payouts or business restrictions under the law.

Proponents of this approach believe that transparency promotes responsible decision-making and prevents misuse of AI systems.

Accountability and responsibility

Stakeholders must take responsibility for the development and use of AI systems and their potential impact on people, society and the environment. They need to ensure the beneficial use of technology.

This includes considering the ethical implications of implementing AI systems and actively participating in decision-making throughout the development and deployment process.

Privacy and data protection

Artificial intelligence systems often use personal information, so ensuring privacy is an important factor. Ethical AI practices include obtaining consent for data collection and use. Users must also be given control over how service providers handle their information.

This ensures compliance with the rights to privacy and protection of personal information, experts believe.

Over such concerns, ChatGPT was blocked in Italy at the end of March 2023. The local regulator accused the developers of illegally collecting personal data, which forced OpenAI to introduce more transparent working methods.

Presumably to avoid the same problems, the global rollout of Google's Bard chatbot bypassed not only the EU, where GDPR applies, but the entire European continent.

Human-centeredness

Ethical AI is based on human-centeredness. This means that algorithms should be developed to enhance the capabilities of people, and not to replace them.

The principle includes considering the potential impact of AI on jobs, social dynamics and general well-being, as well as ensuring that the technology is aligned with human values.

Business and law

Adherence to AI ethics is not only responsible behavior. It is also necessary for commercial success. Ethical issues can turn into business risks, such as product failures, legal problems or damage to the brand's reputation.

In theory, Amazon's use of algorithms to track deliveries could keep drivers safer. However, employees were unhappy with what they saw as abusive surveillance, which caused an uproar in the media, and top management had to explain itself.

Meanwhile, the Writers Guild of America announced its largest strike in 15 years, partly related to the spread of generative AI. The writers demand a ban or significant limits on the use of the technology in writing, as well as a review of pay.

It is not known how the current protest will end. However, the 100-day strike in 2007-2008 cost the California economy $2.1 billion.

One of the reasons for such events may be the uncontrolled development and use of algorithms. However, individual countries and even cities apply their own approaches to the moral side of the issue. This means that companies need to know the AI rules of the jurisdiction in which they operate.

Clear examples are the "right to explanation" provisions in the GDPR and the relevant sections of the California Consumer Privacy Act.

Some American cities have decided to restrict the use of algorithms at the local level. In November 2022, New York City banned biased AI recruiting systems. Before that, a rule requiring private businesses to protect the confidentiality of visitors' biometric data came into force in the city.

Ethical AI is essential in technology development and implementation. It can ensure fairness, transparency, confidentiality and human-centeredness for the favorable development of society.

In addition, the application of the guidelines can provide companies with commercial benefits, in particular by preventing anxiety among employees, consumers and other interested parties.

Controversies and debates around AI can turn into a constructive dialogue. At the same time, excessive regulation can hinder the development of the technology and destroy untapped potential. There is therefore a need to find well-considered solutions for the ethical use of AI that will satisfy as many people as possible.
